easykf-2.04

Two algorithms are implemented, both taken from Van der Merwe's PhD thesis "Sigma-Point Kalman Filters for Probabilistic Inference in Dynamic State-Space Models":

To use the library, you simply need to:

- iterate over your samples: ukf_*_iterate()
- free the memory: ukf_*_free()

To use these functions, you only need to define your evolution/observation functions, which are passed to the ukf_*_iterate functions together with the samples.

Warning: always use gsl_vector_get and gsl_vector_set in your evolution/observation functions. Never access the elements of the vectors directly through the data array of the gsl_vectors.

For UKF parameter estimation, two versions are implemented: one for a scalar output and one for a vectorial output. The vectorial version also works in the scalar case, but it is more expensive in memory and time than the scalar version when the output is scalar.
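Both versions build on the unscented transform. The minimal C++ sketch below shows the standard scaled sigma-point construction described in Van der Merwe's thesis. For an L-dimensional mean and covariance, it produces 2L+1 sigma points together with their mean and covariance weights.

The names (`sigma_points`, `SigmaPoints`, `cholesky`) and the default values of the scaling parameters alpha, beta and kappa are illustrative assumptions. This is not easykf's internal code or API.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Sketch of the scaled sigma points of the unscented transform
// (standard formulation; illustrative names, NOT easykf's API).
using Vec = std::vector<double>;
using Mat = std::vector<Vec>;  // square matrix stored row by row

// Lower-triangular Cholesky factor S such that S * S^T = A (A symmetric positive definite).
Mat cholesky(const Mat& A) {
    const std::size_t n = A.size();
    Mat S(n, Vec(n, 0.0));
    for (std::size_t i = 0; i < n; ++i) {
        for (std::size_t j = 0; j <= i; ++j) {
            double sum = A[i][j];
            for (std::size_t k = 0; k < j; ++k) sum -= S[i][k] * S[j][k];
            if (i == j) {
                assert(sum > 0.0 && "matrix is not positive definite");
                S[i][i] = std::sqrt(sum);
            } else {
                S[i][j] = sum / S[j][j];
            }
        }
    }
    return S;
}

struct SigmaPoints {
    Mat points;  // 2L+1 points of dimension L
    Vec wm;      // weights used to recombine a mean
    Vec wc;      // weights used to recombine a covariance
};

// alpha, beta, kappa: the usual scaling parameters (default values are illustrative).
SigmaPoints sigma_points(const Vec& mean, const Mat& P,
                         double alpha = 0.1, double beta = 2.0, double kappa = 0.0) {
    const std::size_t n = mean.size();
    const double L = static_cast<double>(n);
    const double lambda = alpha * alpha * (L + kappa) - L;
    Mat scaled = P;
    for (Vec& row : scaled)
        for (double& v : row) v *= (L + lambda);
    const Mat S = cholesky(scaled);  // column j of S is the j-th offset

    SigmaPoints sp;
    sp.points.assign(2 * n + 1, mean);  // every point starts at the mean
    sp.wm.assign(2 * n + 1, 1.0 / (2.0 * (L + lambda)));
    sp.wc = sp.wm;
    sp.wm[0] = lambda / (L + lambda);
    sp.wc[0] = sp.wm[0] + (1.0 - alpha * alpha + beta);
    for (std::size_t j = 0; j < n; ++j)
        for (std::size_t i = 0; i < n; ++i) {
            sp.points[1 + j][i] += S[i][j];      // mean + j-th column
            sp.points[1 + n + j][i] -= S[i][j];  // mean - j-th column
        }
    return sp;
}
```

By construction, the weighted mean and covariance of the returned points reproduce the input mean and covariance, up to rounding. This makes a convenient sanity check.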

For the scalar version:

Examples using the scalar version:

For the vectorial version:

Examples using the vectorial version:

The joint UKF tries to estimate both the state and the parameters of a system. The structures and methods related to the joint UKF are:

In addition to a g++ compiler with the standard libraries, you also need to install:

The installation follows the standard CMake procedure, for example on Linux:

    mkdir build
    cd build
    cmake .. -G"Unix Makefiles" -DCMAKE_INSTALL_PREFIX=<the prefix where you want the files to be installed>
    make
    make install

This compiles and installs the library, the examples and the documentation.

Running example-001-xor (possibly several times, if it falls into a local minimum), you should get the following classification:

An example set of learned parameters is:

    x[0] -- (9.89157) --> y[0]
    x[1] -- (4.18644) --> y[0]
    Bias y[0] : 8.22042

    x[0] -- (10.7715) --> y[1]
    x[1] -- (4.18047) --> y[1]
    Bias y[1] : -8.70185

    y[0] -- (6.9837) --> z
    y[1] -- (-6.83324) --> z
    Bias z : -3.89682
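To make these numbers concrete, the following C++ sketch evaluates the 2-2-1 network with the parameters listed above. It rests on two assumptions that this page does not confirm:

- the transfer function is the logistic sigmoid 1/(1+exp(-x));
- the two XOR inputs are encoded as -1 and +1.

`sigmoid` and `xor_net` are hypothetical names.

```cpp
#include <cassert>
#include <cmath>

// ASSUMED transfer function: logistic sigmoid (the example may use another one).
double sigmoid(double x) { return 1.0 / (1.0 + std::exp(-x)); }

// Forward pass of the 2-2-1 network with the learned parameters listed above.
// Inputs are ASSUMED to be encoded as -1.0 / +1.0.
double xor_net(double x0, double x1) {
    const double y0 = sigmoid(9.89157 * x0 + 4.18644 * x1 + 8.22042);   // hidden unit y[0]
    const double y1 = sigmoid(10.7715 * x0 + 4.18047 * x1 - 8.70185);   // hidden unit y[1]
    return sigmoid(6.9837 * y0 - 6.83324 * y1 - 3.89682);              // output z
}
```

Under these assumptions, y[0] behaves like an OR of the inputs and y[1] like an AND. As a result, z is high only when exactly one input is +1. Thresholding z at 0.5 yields 0 for (-1,-1) and (+1,+1), and 1 for (-1,+1) and (+1,-1).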

The transfer function is a sigmoid:

Here we use a 2-12-1 MLP with a sigmoidal transfer function to learn the extended XOR problem. The transfer function has the shape:

The classification should look like this:

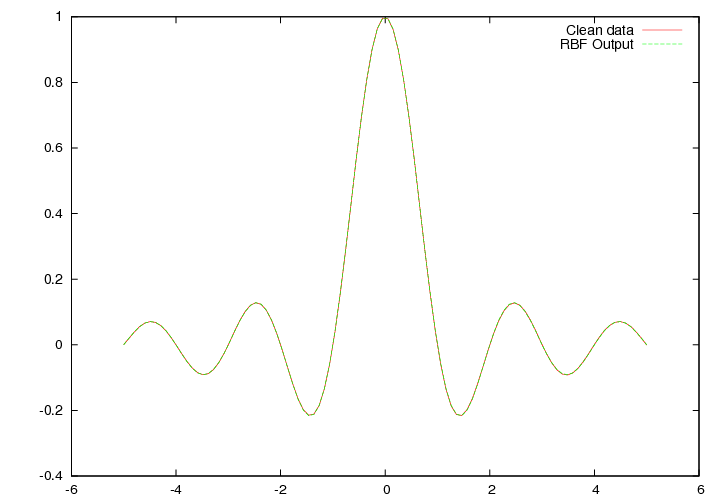

In this example, we use an RBF network with 10 kernels to approximate the sinc function on [-5.0, 5.0]. To make the task easier for the algorithm, we spread the centers of the Gaussians evenly over [-5.0, 5.0].

The results are saved in 'example-003.data': the first column contains the x position, the second column the output of the trained RBF network, and the last column the value of sinc(x).
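The following C++ sketch mirrors the setup of this example under these assumptions:

- 10 Gaussian kernels whose centers are evenly spread on [-5, 5];
- a common width equal to the spacing between centers;
- no bias term;
- sinc(x) = sin(x)/x.

To keep it short and self-contained, it fits the output weights by ordinary linear least squares instead of UKF parameter estimation. It therefore illustrates the model, not easykf's training procedure. `Rbf` and `fit_least_squares` are hypothetical names.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <utility>
#include <vector>

// ASSUMED definition: sinc(x) = sin(x)/x with sinc(0) = 1
// (the normalized variant sin(pi x)/(pi x) is also common).
double sinc(double x) { return x == 0.0 ? 1.0 : std::sin(x) / x; }

// Hypothetical RBF network: n Gaussian kernels evenly spread on [lo, hi],
// common width equal to the spacing (assumption), no bias term.
struct Rbf {
    std::vector<double> centers;
    double sigma;
    std::vector<double> weights;

    Rbf(std::size_t n, double lo, double hi)
        : centers(n), sigma((hi - lo) / static_cast<double>(n - 1)), weights(n, 0.0) {
        for (std::size_t k = 0; k < n; ++k) centers[k] = lo + static_cast<double>(k) * sigma;
    }
    double kernel(std::size_t k, double x) const {
        const double d = x - centers[k];
        return std::exp(-d * d / (2.0 * sigma * sigma));
    }
    double operator()(double x) const {  // network output: weighted sum of kernels
        double y = 0.0;
        for (std::size_t k = 0; k < centers.size(); ++k) y += weights[k] * kernel(k, x);
        return y;
    }
};

// Least-squares fit of the output weights: builds the normal equations
// (Phi^T Phi) w = Phi^T y and solves them by Gaussian elimination with partial
// pivoting. Stand-in for the UKF training used by the example.
void fit_least_squares(Rbf& net, const std::vector<double>& xs, const std::vector<double>& ys) {
    assert(xs.size() == ys.size());
    const std::size_t n = net.centers.size();
    std::vector<std::vector<double>> A(n, std::vector<double>(n + 1, 0.0));  // [Phi^T Phi | Phi^T y]
    for (std::size_t s = 0; s < xs.size(); ++s)
        for (std::size_t i = 0; i < n; ++i) {
            const double phi_i = net.kernel(i, xs[s]);
            for (std::size_t j = 0; j < n; ++j) A[i][j] += phi_i * net.kernel(j, xs[s]);
            A[i][n] += phi_i * ys[s];
        }
    for (std::size_t col = 0; col < n; ++col) {  // forward elimination
        std::size_t piv = col;
        for (std::size_t r = col + 1; r < n; ++r)
            if (std::fabs(A[r][col]) > std::fabs(A[piv][col])) piv = r;
        std::swap(A[col], A[piv]);
        for (std::size_t r = col + 1; r < n; ++r) {
            const double f = A[r][col] / A[col][col];
            for (std::size_t c = col; c <= n; ++c) A[r][c] -= f * A[col][c];
        }
    }
    for (std::size_t i = n; i-- > 0;) {  // back substitution
        double v = A[i][n];
        for (std::size_t j = i + 1; j < n; ++j) v -= A[i][j] * net.weights[j];
        net.weights[i] = v / A[i][i];
    }
}
```

A loop over x in [-5, 5] that prints x, net(x) and sinc(x) gives the same three-column layout as 'example-003.data'.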

In this example, we learn the two outputs (x, y) from the inputs (theta, phi) of MacKay's robot-arm dataset. To do so, we train a 2-12-2 MLP with a parametrized sigmoidal transfer function.
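With UKF for parameter estimation, the weights and biases of the network are the parameters being estimated, so their number sets the size of the filter. The C++ helpers below are hypothetical illustrations, not part of easykf:

- one counts the weights and biases of a fully connected MLP;
- the other gives the 2L+1 sigma points used by a standard UKF for L parameters.

They ignore any parameters of the transfer function itself, such as those of the parametrized sigmoid, because this page does not say how many there are.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Number of weights + biases of a fully connected MLP whose layer sizes are
// given in `layers` (e.g. {2, 12, 2}). Parameters of the transfer function
// itself (such as a parametrized sigmoid) are NOT counted.
std::size_t mlp_parameter_count(const std::vector<std::size_t>& layers) {
    std::size_t count = 0;
    for (std::size_t l = 1; l < layers.size(); ++l)
        count += layers[l - 1] * layers[l]  // weights from layer l-1 to layer l
               + layers[l];                 // one bias per unit of layer l
    return count;
}

// A standard UKF over an L-dimensional parameter vector uses 2L+1 sigma points.
std::size_t sigma_point_count(std::size_t L) { return 2 * L + 1; }
```

For instance, the 2-2-1 XOR network has 9 parameters (the six weights and three biases listed earlier). The 2-12-1 MLP has 49, and the 2-12-2 MLP has 62, which means 125 sigma points per iteration.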

Here we use UKF for parameter estimation to find the minimum of the Rosenbrock banana function:

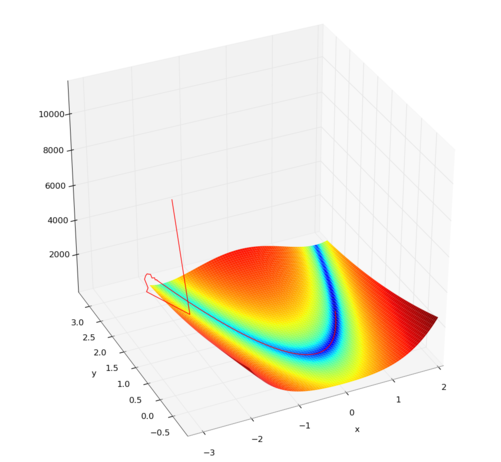

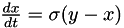

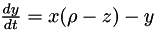

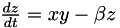

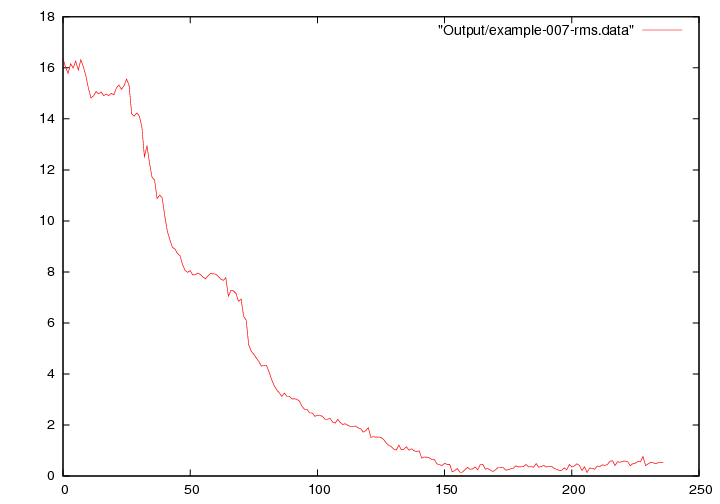

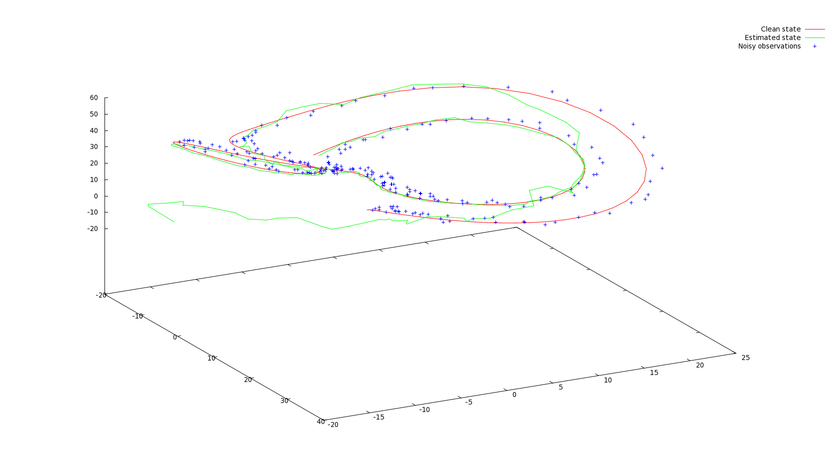
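For reference, here is the banana function in its common two-dimensional form, f(x, y) = (a - x)^2 + b (y - x^2)^2 with a = 1 and b = 100, as a short C++ sketch. These constants are an assumption; the example may use other values. With them, the global minimum is f(1, 1) = 0. It lies at the bottom of a long, curved, flat valley, which is what makes the function a classic test case for optimizers. `rosenbrock` and `rosenbrock_gradient` are hypothetical names.

```cpp
#include <cassert>
#include <cmath>

// Rosenbrock "banana" function f(x, y) = (a - x)^2 + b (y - x^2)^2,
// with the common constants a = 1, b = 100 (ASSUMED). Minimum: f(a, a^2) = 0.
double rosenbrock(double x, double y, double a = 1.0, double b = 100.0) {
    const double dx = a - x;
    const double dy = y - x * x;
    return dx * dx + b * dy * dy;
}

// Analytic gradient (df/dx, df/dy), useful to check that a point is stationary.
void rosenbrock_gradient(double x, double y, double& gx, double& gy,
                         double a = 1.0, double b = 100.0) {
    gx = -2.0 * (a - x) - 4.0 * b * x * (y - x * x);
    gy = 2.0 * b * (y - x * x);
}
```

The gradient vanishes at (1, 1), which gives a simple way to check the point the filter converges to.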

In this example, we try to find the parameters (initial condition, evolution parameters) of a noisy Lorenz attractor. The dynamics of the Lorenz attractor are defined by the three equations:

While observing a noisy trajectory of such a Lorenz attractor, the algorithm tries to find the current state and the evolution parameters. The samples we provide are:

To clearly see how the UKF catches up with the true state, we initialized its estimated state to (-15, -15, -15).
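In their standard form, the Lorenz equations read dx/dt = sigma (y - x), dy/dt = x (rho - z) - y and dz/dt = x y - beta z. The parameter names are the usual ones; the example's own notation may differ.

The C++ sketch below implements these equations together with one explicit Euler step. The Euler step is one simple way, assumed here and not necessarily the example's, to turn the continuous dynamics into the discrete-time evolution function a UKF needs. In a joint formulation, the estimated vector would typically concatenate the state (x, y, z) with the parameters (sigma, rho, beta). `lorenz_derivative` and `lorenz_euler_step` are hypothetical names.

```cpp
#include <array>
#include <cassert>
#include <cmath>

// Standard Lorenz system (usual parameter names; the example may differ):
//   dx/dt = sigma * (y - x)
//   dy/dt = x * (rho - z) - y
//   dz/dt = x * y - beta * z
using State = std::array<double, 3>;  // (x, y, z)

State lorenz_derivative(const State& s, double sigma, double rho, double beta) {
    return {sigma * (s[1] - s[0]),
            s[0] * (rho - s[2]) - s[1],
            s[0] * s[1] - beta * s[2]};
}

// One explicit Euler step x_{k+1} = x_k + dt * f(x_k): an ASSUMED, simple way
// to obtain a discrete-time evolution function from the continuous dynamics.
State lorenz_euler_step(const State& s, double dt, double sigma, double rho, double beta) {
    const State d = lorenz_derivative(s, sigma, rho, beta);
    return {s[0] + dt * d[0], s[1] + dt * d[1], s[2] + dt * d[2]};
}
```

With the classic values sigma = 10, rho = 28 and beta = 8/3, the derivative at (1, 1, 1) is (0, 26, -5/3), and the origin is a fixed point.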

1.8.6
